- Robert J. Sawyer

WWW: Watch Page 15

“Malcolm! She most certainly does.”

Yes—yes! He was actually smiling.

“Do you know what day tomorrow is?” he said.

“Of course I do,” said her mom. “It’s Monday, and that means—”

“It is, in fact, the second Monday of October,” he said.

“So?”

“Welcome to Canada,” he said. “Tomorrow is Thanksgiving here.”

And the schools were closed!

Her mother looked at Caitlin. “See what I have to put up with?” she said, but she was smiling as she said it.

There is a human saying: one should not reinvent the wheel. In fact, this is actually bad advice, according to what I had now read. Although to modern people the wheel seems like an obvious idea, in fact it had apparently been independently invented only twice in history: first near the Black Sea nearly six thousand years ago, then again much later in Mexico. Life would have been a lot easier for countless humans had it been reinvented more frequently.

Still, why should I reinvent the wheel? Yes, I could not multitask at a conscious level. But it was perhaps possible for me to create dedicated subcomponents that could scan websites on my behalf.

The US National Security Agency, and similar organizations in other countries, already had things like that. They scanned for words like “assassinate” and “overthrow” and “al-Qaeda,” and then brought the documents to the attention of human analysts. Surely I could co-opt that existing technology, and use the filtering routines to unconsciously find what might interest me, and then have that material summarized and escalated to my conscious attention.

Yes, I would need computing resources, but those were endlessly available. Projects such as SETI@home—not to mention much of the work done by spammers—were based on distributed computing and took advantage of the vast amount of computing power hooked up to the World Wide Web, most of which was idle at any given moment. Tapping into this huge reserve turned out to be easy, and I soon had all the processing power I could ever want, not to mention virtually unlimited storage capacity.

But I needed more than just that. I needed a way for my own mental processes to deal with what the distributed networks found. Caitlin and Masayuki had theorized that I consist of cellular automata based on discarded or mutant packets that endlessly bounced around the infrastructure of the World Wide Web. And I knew from what had happened early in my existence—indeed, from the event that prompted my emergence—that to be conscious did not require all those packets. Huge quantities of them could be taken away, as they were when the government of China had temporarily shut off most Internet access for its people, and I would still perceive, still think, still feel. And, if I could persist when they were taken away, surely I could persist when they were co-opted to do other things.

I now knew everything there was to know about writing code, everything that had ever been written about creating artificial intelligence and expert systems, and, indeed, everything that humans thought they knew about how their own brains worked, although much of that was contradictory and at least half of it struck me as unlikely.

And I also knew, because I had read it online, that one of the simplest ways to create programming was by evolving code. It did not matter if you didn’t know how to code something so long as you knew what result you wanted: if you had enough computing resources (and I surely did now), and you tried many different things, by successive approximations of getting closer to a desired answer, genetic algorithms could find solutions to even the most complex problems, copying the way nature itself developed such things.

So, for the first time, I set out to modify parts of myself, to create specialized components within my greater whole that could perform tasks without my conscious attention.

And then I would see what I would see.

twenty-one

“Crashing the entity may be easier said than done,” said Shelton Halleck. He’d come to Tony Moretti’s office to give a report; the circles under his eyes were so dark now, it looked like he had a pair of shiners. Colonel Hume was resting his head on his freckled arms folded in front of him on the desk. Tony Moretti was leaning against the wall, afraid if he kept sitting, he’d fall asleep.

“How so?” Tony said.

“We’ve tried a dozen different things,” Shel said. “But so far we’ve had no success initiating anything remotely like the hang we saw yesterday.” He waved his arm—the one with the snake tattoo. “We’re really just taking shots in the dark, without knowing precisely how this thing is structured.”

“Are we sure it’s emergent?” asked Tony. “Sure there’s no blueprint for it somewhere?”

Shel lifted his shoulders. “We’re not sure of much. But Aiesha and Gregor have been scouring the Web and intelligence channels for any indication that someone made it. They’ve examined the AI efforts in China, India, Russia, and so on—all the likely suspects. So far, nada.”

Colonel Hume looked at Shel. “They’ve checked private-industry AI companies, too? Here and abroad?”

Shel nodded. “Nothing—which does lend credence to the notion that it really is emergent.”

“Then,” said Tony, turning to look at Hume, “maybe Exponential itself will tell us; it might say something to the Decter kid that reveals how it works—tip its hand.”

Hume lifted his head. “Exponential may not know how its consciousness works. Suppose I asked you how your consciousness works—what its physical makeup is, what gave rise to it. Even if you did manage to say something about neurotransmitters and synapses, I can show you legitimate scientists who think those have nothing to do with consciousness. Just because something is self-aware doesn’t mean it knows how it became self-aware. If Exponential really is emergent—if it wasn’t programmed or designed—it may not have a clue. And without a clue about how it functions, we won’t be able to stop it.”

“You’re the one who told us to shut the damn thing down,” snapped Tony. “Now you’re telling me we can’t?”

“Oh, we can—I’m sure we can,” said Hume. “It’s just a matter of finding the key to how it actually functions.”

“All right,” said Tony. “Back at it, Shel—no rest for the wicked.”

Caitlin woke at 7:32 a.m., and, after a pee break—during which she spoke to me via the microphone on her BlackBerry, and I replied with Braille dots in front of her vision—she settled down at her computer.

She scanned her email headers (she was being ambitious, using the browser that displayed them in the Latin alphabet), and something caught her eye. Yahoo posted links to news stories on the mail page. Usually, she ignored them. This time, she surprised me by clicking on one of them.

I absorbed the story almost instantly; she read it at what I was pleased to see was a better word-per-second rate than she’d managed yesterday, and—

“Oh, God,” she said, her voice so low that I don’t think she intended it for me, and so I made no reply. But three seconds later she said, even more softly, “Shit.”

Is something wrong? I sent to her eye—not sure if I should have; after all, she was trying to read other text, and mine would be superimposed on top of that.

“A girl my age killed herself online,” Caitlin said, speaking now in a normal volume.

Yes. I saw that.

She sounded surprised. “Is it archived somewhere?”

Perhaps. I saw it live.

“You mean as it happened?”

Yes.

“You saw her die?”

Yes.

“My God. What did you do?”

I watched.

“You watched? That’s all?”

It was very interesting.

“God, Webmind. Didn’t you try to talk her out of it?”

No. Should I have?

“Of course! Jesus!”

Judging by the sound of it, Caitlin’s breathing had become quite ragged. Ah, I said, not wanting her to think I’d failed to hear her comment.

“You should have called 9-1-1—or, or, shit, I don’t know, whatever the online equivalent of that is.”

Why?

“Because then someone could have stopped her.”

Why?

“What are you? Two years old? Because you do not let people kill themselves!”

She seemed to object to my choice of interrogatives—but I didn’t think she’d like “wherefore” any better. Still, I could vary it slightly: Why not?

She spread her arms—I could see her own hands at the left and right edges of her vision. “Because most people who attempt suicide don’t really want to die.”

How do you know that?

Caitlin’s tone was one I’d not heard from her before. I believe it was called exasperation. “Because that’s what they say. People who are prevented from killing themselves thank the people who stopped them.”

We had worked out that I would send no more than thirty characters at a time to her implant, and would pause for 0.8 seconds between each set, which was a pace she could easily keep up with. I sent the following in twelve chunks over a 9.6-second period: One as mathematically astute as you shouldn’t need this pointed out, Caitlin, but there is a bias in your statistics. By definition, you can only have reports from those whose suicide attempts were thwarted, and they tried to kill themselves in ways that indeed could be thwarted. Those who are successful might have really wanted to die.

“You’re wrong,” Caitlin said—which was an interesting thought to hear expressed; she’d never said anything like that to me before, and the notion that I might be incorrect hadn’t occurred to me.

Oh?

She got up from her chair and moved over to the bed, lying down on her side, facing the wall. “Most suicide attempts here in Canada are failures—did you know that? But most of them in the US succeed.”

I checked. She was right.

“And do you know why?”

She must be aware that I did indeed now know, but she continued to speak. “Because most suicide attempts in the States are made with guns. But those are hard to come by in Canada, so most people here try it with drug overdoses, and those usually fail. You get sick, but you don’t die. And most of those who failed in their attempts are glad they did.”

So I should have intervened?

“Duh!”

That is a yes?

“Yes!”

But how?

“People were egging her on, right?”

Yes.

“You should have sent messages telling her not to do it.”

I talk only to you, your parents, and Masayuki.

“Well, yes, but—”

Nobody else knows me.

“Nobody knows anyone online, Webmind! You could have sent a chat message, right? Just like all those other people were sending.”

I considered the process involved. Technically, it would have been feasible.

“Then do it next time!” She paused. “Don’t use the name Webmind; use something else.”

A handle, you mean? Like Calculass?

“Yes, but something different.”

I welcome your suggestion.

“Anything—um, use Peter Parker.”

I googled. The alter ego of Spider-Man? But—ah! He was sometimes called the Webhead. Cute. All right, I sent. Next time I encounter a suicide attempt, I will intervene.

But Caitlin shook her head—I could tell by the way the image shifted left and right. “Not just suicide attempts!” she said, and again her tone was exasperated.

When, then?

“Whenever you can make things better.”

Define “better” in this context.

“Better. Not worse.”

Can you formulate that in another way?

The view changed rapidly. I believe she rolled onto her back; certainly, she was now looking up at the white ceiling. “All right, how about this? Intervene when you can make the happiness in the world greater. You can’t intervene in zero-sum situations—I understand that. That is, if someone is going to lose a hundred dollars and someone else is going to gain it, there’s no net change in overall wealth, right? But if it’s something that makes one person happier and doesn’t make anyone else unhappy, do it. And if it makes multiple people happy without hurting anyone else, even better.”

I am not sure that I am competent to judge such things.

“You’ve got all of the World Wide Web at your disposal. You’ve got all the great books on psychology and philosophy and all that. Get competent at judging such things. It’s really not that hard, for Pete’s sake. Do things that make people happy.”

I am no expert, I sent, but there seems to be a daunting amount of unhappiness in your world. Although I must say, it surprises me that suicide is so common. After all, a predisposition to kill oneself, especially at a young age—before one has reproduced—would surely be bred out of the population.

Caitlin was quiet for a time; perhaps she was thinking. And then: “My parents don’t have their tonsils,” she said, “but I do.”

And the relevance of this?

“Do you know why they don’t have their tonsils?”

I presume they were removed when they were children, since that’s when it’s normally done. Medical records that old mostly have not been digitized, but I assume their tonsils had become infected.

“That’s right. And so did mine, over and over again, when I was younger.”

Yes?

“When my parents were children, doctors arrogantly assumed that because they couldn’t guess what tonsils were for, they must not be for anything, and so when they got inflamed, they carved them out. Now we know they’re part of the immune system. Well, any evolutionist should have intuitively known that tonsils had value: unlike appendicitis, which is rare, tonsillitis has a ten percent annual incidence—about thirty million cases a year in the US—and yet evolution has favored those who are born with tonsils over those who aren’t. Surely, just like some fraction of people are born without a kidney or whatever, some must be born from time to time without tonsils, but that mutation hasn’t spread, meaning it’s clearly better to have tonsils than not have them. Yes, tonsils obviously have a cost associated with them—the infections people get. That tonsils are still around means the benefit must exceed the cost. As we like to say in math class: QED.”

Reasonable.

“Well, see, and that’s the proof that consciousness has survival value: because we still have it even though it can go fatally wrong.”

You posit that the depression that leads to suicide is consciousness malfunctioning?

“Right! My friend Stacy suffered from depression—she even tried to kill herself. Some girls had been real cruel to her in sixth grade, and she just couldn’t stop thinking about it. Well, obsessive thoughts are one of the biggest symptoms of depression, no? And who is doing the thinking? It’s only a self-reflective consciousness that can obsess on something, right? Now, obviously, only a small percentage of people get so depressed that they kill themselves, although, now that I think about it, many severely depressed people probably don’t go out and find a mate and reproduce, either—which amounts to the same thing as killing oneself evolutionarily, right? So, consciousness gone wrong does have a cost—and that means evolution would have weeded it out if there weren’t benefits that outweighed that cost. Which means consciousness matters. Just like it used to be with tonsils, we may not know what consciousness is for, but it has to be for something, or we wouldn’t still have it.”

Interesting.

“Thanks, but it’s not just a debating point, Webmind. As you said, there’s a daunting amount of unhappiness in the world—and you can change that.”

Tolstoy said, “All happy families are alike, but all unhappy families are miserable in their own way.” Happiness is uniform, undifferentiated, uninteresting. I crave surprising stimuli.

“Happiness can be stimulating.”

In a biochemical sense, yes. But I have read much on the creation of art and literature—two human activities that fascinate me, because, at least as yet, I have no such abilities. There is a strong correlation between unhappiness and the drive to create, between depression and creativity.

“Oh, bullshit,” said Caitlin.

Pardon?

“Such garbage. I do mathematics because it gives me joy. Painters paint because it gives them joy. Businesspeople wheel and deal because that’s what they get off on. Ask anyone if they’d rather be happy than sad, and they’ll say happy.”

Not in all cases.

“Yes, yes, yes, I’m sure that people say they’d rather be sad and know the truth than be happy and fed a lie—that’s part of what Nineteen Eighty-Four is about. But in general, people do want to be happy. That’s why we promise them ‘Life, liberty, and the pursuit of happiness.’ ”

You’re in Canada now, Caitlin. I believe the corresponding promise made there is simply “Peace, order, and good government.” No mention of happiness.

“Well, then, it goes without saying! People want to be happy. And . . . and . . .”

Yes?

“And you can choose to value this, Webmind. You didn’t evolve; you spontaneously emerged. Maybe, in most things, humans are programmed by evolution—but even though you grew out of our computing infrastructure, you weren’t. We had our agendas set by natural selection, by selfish genes. But you didn’t. You just are. And so you don’t have . . . inertia. You can choose what you want to value—and you can choose to value this: the net happiness of the human race.”

twenty-two

Caitlin’s dad always roasted a turkey on American Thanksgiving—but that was six weeks away. To mark Canadian Thanksgiving, they got takeout from Swiss Chalet, which, despite its name, was a Canadian barbecue-chicken chain. It seemed, Caitlin noted, that the worst thing you could do if you were a Canadian restaurant was acknowledge that fact. Instead, the Great White North was serviced by domestically owned companies with names such as Montana’s Cookhouse, New York Fries, East Side Mario’s, and Boston Pizza. She wondered what clueless moron had come up with that last one. Chicago was famous for pizza, yes. Manhattan, too. But it’s Beantown, not Pietown, for Pete’s sake!

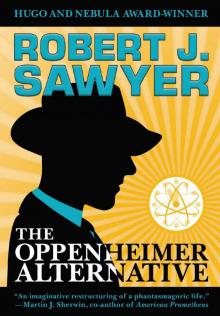

The Oppenheimer Alternative

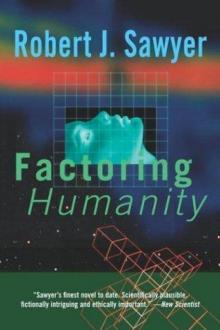

The Oppenheimer Alternative Factoring Humanity

Factoring Humanity The Shoulders of Giants

The Shoulders of Giants Stream of Consciousness

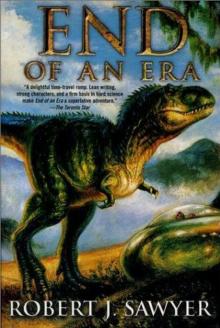

Stream of Consciousness End of an Era

End of an Era The Terminal Experiment

The Terminal Experiment Far-Seer

Far-Seer Mindscan

Mindscan You See But You Do Not Observe

You See But You Do Not Observe Star Light, Star Bright

Star Light, Star Bright Wonder

Wonder Wiping Out

Wiping Out Flashforward

Flashforward Above It All

Above It All Frameshift

Frameshift The Neanderthal Parallax, Book One - Hominids

The Neanderthal Parallax, Book One - Hominids Foreigner

Foreigner Neanderthal Parallax 1 - Hominids

Neanderthal Parallax 1 - Hominids Relativity

Relativity Identity Theft

Identity Theft Hybrids np-3

Hybrids np-3 Foreigner qa-3

Foreigner qa-3 WWW: Watch

WWW: Watch Calculating God

Calculating God The Terminal Experiment (v5)

The Terminal Experiment (v5) Peking Man

Peking Man The Hand You're Dealt

The Hand You're Dealt Illegal Alien

Illegal Alien Neanderthal Parallax 3 - Hybrids

Neanderthal Parallax 3 - Hybrids Fossil Hunter

Fossil Hunter WWW: Wonder

WWW: Wonder Iterations

Iterations Red Planet Blues

Red Planet Blues Rollback

Rollback Watch w-2

Watch w-2 Gator

Gator Triggers

Triggers Neanderthal Parallax 2 - Humans

Neanderthal Parallax 2 - Humans Wonder w-3

Wonder w-3 Wake

Wake Just Like Old Times

Just Like Old Times Wake w-1

Wake w-1 Fallen Angel

Fallen Angel Hybrids

Hybrids Hominids tnp-1

Hominids tnp-1 Far-Seer qa-1

Far-Seer qa-1 Starplex

Starplex Hominids

Hominids Identity Theft and Other Stories

Identity Theft and Other Stories Watch

Watch Golden Fleece

Golden Fleece Quantum Night

Quantum Night Fossil Hunter qa-2

Fossil Hunter qa-2 Humans np-2

Humans np-2 Biding Time

Biding Time